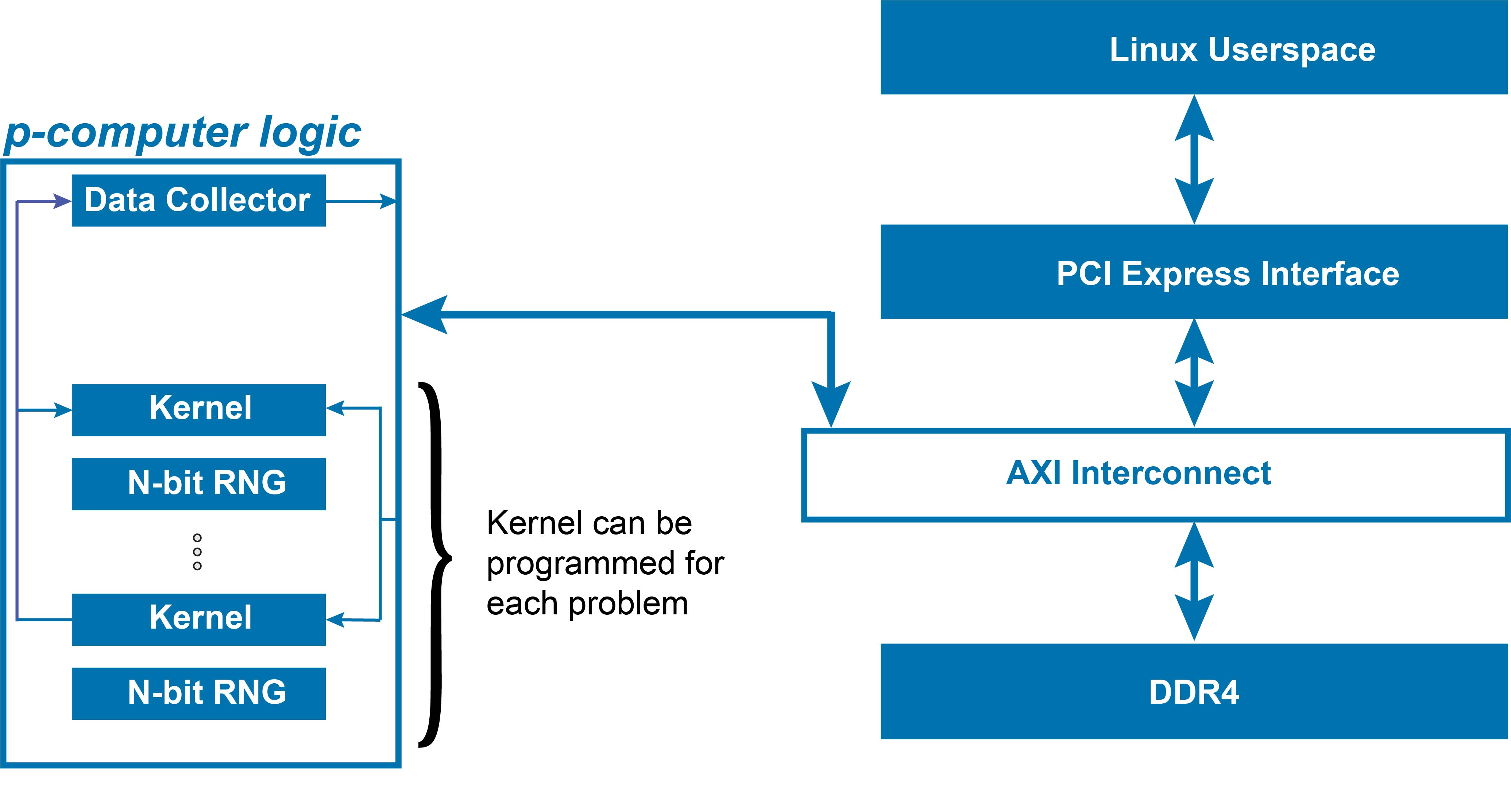

Co-processing on the cloud

With Moore's law slowing down, domain-specific accelerators are arguably the only way forward to reduce the energy consumption of the desired operations and stay within the power budget. Here, AWS cloud FPGAs were used to accelerate deep learning and optimization applications by mapping the algorithms to energy-efficient hardware designs.